- Direct Answer: What are the Key GPU Improvements?

- 1. The Precision Revolution: Blackwell & FP4 Tensor Cores

- 2. Memory Wall: Why HBM3e is the New Gold Standard

- 3. Intel Crescent Island: The High-Capacity Challenger

- 4. Local Inference: The RTX 5090 & Prosumer Power

- 5. Disaggregated Serving: Separating Prefill from Decode

- Frequently Asked Questions

Direct Answer: What are the Key GPU Improvements?

The latest GPU architecture improvements for AI inference focus on precision efficiency and memory bandwidth. Key advancements include NVIDIA’s Blackwell architecture, which adds hardware support for FP4 to roughly double throughput relative to FP8 without significant accuracy loss, and the adoption of HBM3e memory to ease the bandwidth bottleneck that dominates Large Language Model (LLM) inference. Additionally, new serving architectures support disaggregated inference, which splits the compute-heavy “prefill” phase from the token-generating “decode” phase so that each can run on hardware suited to it.

1. The Precision Revolution: Blackwell & FP4 Tensor Cores

For years, the standard for AI training and inference was FP16 (16-bit floating point) or BF16. However, as models ballooned to trillions of parameters, the computational cost became unsustainable. The most significant architectural improvement in 2025 is the hardware-level support for FP4 (4-bit floating point), pioneered by NVIDIA’s Blackwell architecture.

How It Works:

Traditional FP16 inference stores and moves 16 bits for every weight or activation value. FP4 reduces this to just 4 bits. In theory, each byte of memory bandwidth then delivers four times as many values, and the Tensor Cores can process more of them per cycle. Blackwell’s Transformer Engine manages precision and scaling, using FP4 for the majority of the inference workload while keeping higher precision (FP8/FP16) for layers that are sensitive to accuracy drops.
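The core idea behind FP4 inference is snapping each value to a tiny grid and storing a shared scale per block. The sketch below simulates that in plain Python. It assumes the E2M1 FP4 format and one scale per 16-value block (the block size NVFP4 uses), but keeps the scale as an ordinary float instead of FP8 and uses simple round-to-nearest. The function names are hypothetical, and real kernels do this on Tensor Cores, not in Python.

```python
# Simulated block-scaled FP4 (E2M1) quantization, for illustration only.
# Assumptions: 16 values per block with one scale each (as in NVFP4), but the
# scale is kept as a plain Python float instead of FP8, and rounding is
# round-to-nearest onto the E2M1 grid. Function names are hypothetical.

# Non-negative magnitudes representable in FP4 E2M1; the sign is a separate bit.
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
FP4_MAX = E2M1_GRID[-1]


def quantize_block(values):
    """Return (scale, codes): codes are signed E2M1 grid values, scale maps them back."""
    amax = max(abs(v) for v in values)
    # Stretch the block so its largest magnitude lands on FP4's largest value (6.0).
    scale = amax / FP4_MAX if amax > 0 else 1.0
    codes = []
    for v in values:
        mag = abs(v) / scale
        nearest = min(E2M1_GRID, key=lambda g: abs(g - mag))
        codes.append(nearest if v >= 0 else -nearest)
    return scale, codes


def quantize(values, block_size=16):
    """Quantize a flat list block by block; returns a list of (scale, codes) pairs."""
    return [quantize_block(values[i:i + block_size])
            for i in range(0, len(values), block_size)]


def dequantize(blocks):
    """Rebuild approximate values: each 4-bit code times its block's scale."""
    return [code * scale for scale, codes in blocks for code in codes]


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.03, 0.9, -0.27, 0.44, 0.0, -0.81]
    restored = dequantize(quantize(weights, block_size=8))
    for w, r in zip(weights, restored):
        print(f"{w:+.3f} -> {r:+.3f}")
```

Per-block scales are the design choice that keeps error bounded. An outlier only stretches the grid for its own block rather than for the whole tensor, so every restored value stays within one scale unit of the original.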

A common misconception is that lower precision equals “dumb” models. This is largely false. Research shows that with advanced quantization techniques, large models can retain over 99% of their accuracy on reasoning benchmarks at FP4 while running 2x-4x faster, although results vary by model and task. This improvement helps turn massive models (like GPT-4 class systems) from slow, batch-processed giants into real-time interactive agents. For a broader look at how these models are applied in business, read our guide on LLM vs Traditional ML models for business automation.

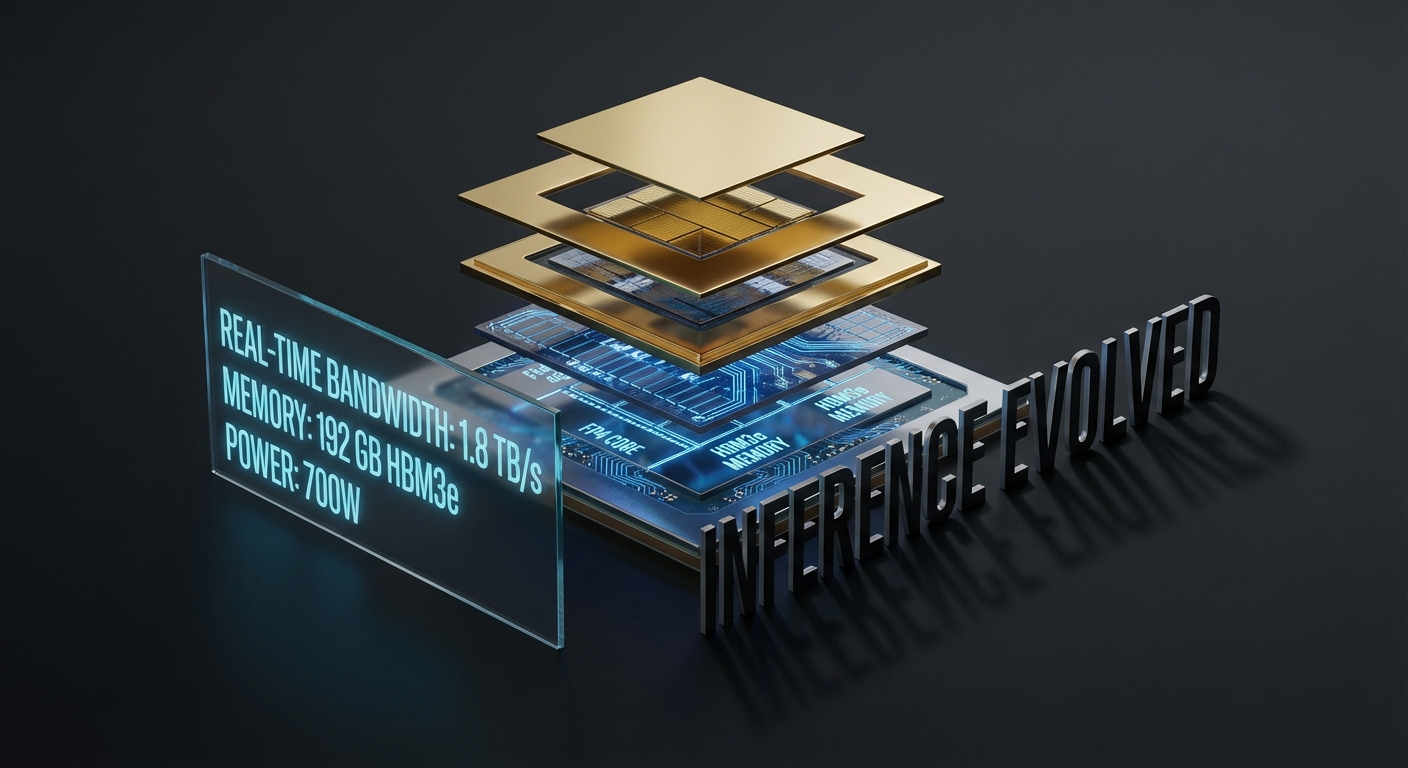

2. Memory Wall: Why HBM3e is the New Gold Standard

In AI inference, and especially in Generative AI, compute power is rarely the bottleneck during token generation; memory bandwidth is. When you chat with a bot, the GPU spends most of each generation step waiting for model weights and cached context to travel from VRAM to its cores. This phenomenon is known as the “Memory Wall.”
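A back-of-the-envelope calculation shows why bandwidth sets the pace. The sketch assumes that, at batch size 1, each generated token requires streaming every weight from VRAM once. The model size and the bandwidth figures (roughly the published numbers for an H100 SXM with HBM3 and an H200 with HBM3e) are illustrative inputs, so check vendor datasheets before relying on them.

```python
# Bandwidth ceiling on batch-1 decode speed: every generated token requires
# streaming (roughly) all model weights from memory once. Ignores KV-cache
# reads, on-chip caching, and kernel overheads, so real speeds are lower.

def max_decode_tokens_per_sec(params_billion, bytes_per_param, bandwidth_tb_s):
    """Upper bound on tokens/s for a single user, set only by memory bandwidth."""
    weight_bytes = params_billion * 1e9 * bytes_per_param
    bandwidth_bytes_per_sec = bandwidth_tb_s * 1e12
    return bandwidth_bytes_per_sec / weight_bytes


if __name__ == "__main__":
    # Illustrative inputs: a 70B-parameter model stored in FP8 (1 byte/param),
    # at roughly the published HBM3 (H100 SXM) and HBM3e (H200) bandwidths.
    for label, tb_s in [("HBM3, 3.35 TB/s", 3.35), ("HBM3e, 4.8 TB/s", 4.8)]:
        print(label, round(max_decode_tokens_per_sec(70, 1.0, tb_s), 1), "tokens/s max")
```

With these inputs, the ceiling rises from about 48 to about 69 tokens per second. It scales in direct proportion to bandwidth, with no change in compute.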

To combat this, the latest architectures have aggressively adopted HBM3e (High Bandwidth Memory 3e), which delivers substantially higher bandwidth than the HBM3 used in the H100.

Why This Matters:

- Tokens Per Second: The speed at which an LLM “types” its answer is bounded largely by memory bandwidth, because every new token requires reading the model’s weights from memory. HBM3e allows for faster, more conversational response times.

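- Batch Size: Because one pass over the weights can be shared by every request in a batch, higher bandwidth (together with enough memory for each user’s KV cache) lets the GPU serve more users simultaneously without queuing them, drastically reducing the cost per query.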
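The batching effect can be estimated the same bandwidth-only way. This sketch assumes one decode step reads the weights once for the whole batch plus each sequence’s own KV cache. The 70 GB of weights, 1 GB of KV cache per user, and 3.35 TB/s are hypothetical inputs, and compute and capacity limits that eventually bind at large batches are not modeled.

```python
# Why batching cuts cost per query: in one decode step the weights are read once
# and shared by every sequence in the batch, while each sequence also reads its
# own KV cache. Bandwidth-only estimate; at large batches compute limits and
# memory capacity (not modeled here) eventually take over.

def aggregate_decode_tokens_per_sec(batch_size, weight_gb, kv_gb_per_seq, bandwidth_tb_s):
    """Total tokens/s across the batch (one new token per sequence per step)."""
    bytes_per_step = (weight_gb + batch_size * kv_gb_per_seq) * 1e9
    steps_per_sec = bandwidth_tb_s * 1e12 / bytes_per_step
    return batch_size * steps_per_sec


if __name__ == "__main__":
    # Hypothetical inputs: 70 GB of weights, 1 GB of KV cache per user, 3.35 TB/s.
    for batch in (1, 8, 32):
        total = aggregate_decode_tokens_per_sec(batch, 70, 1.0, 3.35)
        print(f"batch={batch:>2}  total={total:7.1f} tok/s  per user={total / batch:5.1f} tok/s")
```

Per-user speed drops somewhat as the batch grows, but total throughput rises many times over, and that is what lowers the cost per query.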

If you are evaluating hardware for a data center, don’t let TFLOPS (compute speed) marketing drive the decision. For LLM serving, look first at the Memory Bandwidth (TB/s) metric: it is the main governor of token-generation speed in 2025.

3. Intel Crescent Island: The High-Capacity Challenger

While NVIDIA dominates the training landscape, Intel is targeting a niche in inference with Crescent Island, its announced data center GPU based on the Xe3P microarchitecture. Intel identified a specific pain point: Memory Capacity.

Many enterprise models don’t need the raw speed of an H100; they just need to fit in memory. If a model is too big for a GPU’s VRAM, it must be split across multiple cards, which adds communication latency and multiplies hardware costs. Intel’s Crescent Island is slated to offer 160 GB of LPDDR5X memory on a single card, trading the peak bandwidth of HBM for cheaper, higher capacity. According to Intel’s newsroom, this allows companies to run massive 70B+ parameter models on a single device, drastically lowering the Total Cost of Ownership (TCO) for inference servers.
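Whether a 70B model fits on one card depends on precision and on the KV cache, not just the parameter count. The check below is a rough sketch. It assumes a Llama-style 70B shape (80 layers, 8 KV heads with grouped-query attention, head dimension 128), 8 concurrent 8K-token contexts, and a 10% reserve for activations and runtime buffers. The helper names are hypothetical.

```python
# Rough single-card capacity check: model weights plus KV cache must fit in memory.
# Assumed model shape: a Llama-style 70B configuration with 80 layers, 8 KV heads
# (grouped-query attention) and head_dim 128. The 10% reserve for activations and
# runtime buffers is also an assumption.

def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, batch, bytes_per_elem):
    # Factor 2: every layer stores one key tensor and one value tensor per token.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch * bytes_per_elem


def fits_on_one_card(params_billion, bytes_per_param, kv_bytes, capacity_gb, reserve_frac=0.1):
    """True if weights + KV cache fit in the capacity left after the reserve."""
    weight_bytes = params_billion * 1e9 * bytes_per_param
    usable_bytes = capacity_gb * 1e9 * (1 - reserve_frac)
    return weight_bytes + kv_bytes <= usable_bytes


if __name__ == "__main__":
    # 8 concurrent 8K-token contexts with an FP16 KV cache (about 21.5 GB).
    kv = kv_cache_bytes(80, 8, 128, seq_len=8192, batch=8, bytes_per_elem=2)
    for label, bpp in [("FP16 weights", 2), ("FP8 weights", 1)]:
        print(label, "fit in 160 GB:", fits_on_one_card(70, bpp, kv, capacity_gb=160))
```

Under these assumptions, FP16 weights (140 GB) plus the cache overflow the usable 144 GB, while FP8 weights fit comfortably. Large capacity and lower precision work together.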

Strategic Takeaway: If your workload involves batch-processing massive documents where real-time millisecond latency isn’t critical but cost is, high-capacity cards like Crescent Island may be a better fit than their bandwidth-focused NVIDIA counterparts.

4. Local Inference: The RTX 5090 & Prosumer Power

Not every AI workload runs in a hyperscale data center. The rise of “Local LLMs” (running models like Llama 3 on your own PC) has driven demand for powerful prosumer cards. Enter the NVIDIA GeForce RTX 5090.

While technically a consumer card, the 5090 features architectural trickle-down from the data center, including fifth-generation Tensor Cores with FP4 support and 32 GB of fast GDDR7 memory. For researchers and developers, this card bridges the gap, allowing them to serve mid-sized models (up to roughly 30B parameters when quantized) and fine-tune them with parameter-efficient methods locally, without paying cloud rent.
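A quick calculation shows why quantization is what makes the ~30B range practical on a single consumer card. The sketch assumes 32 GB of VRAM with 4 GB held back for the KV cache, activations, and runtime, and it ignores the small scale overhead of real quantized formats. The function name is hypothetical.

```python
# Largest model whose weights fit in a given amount of VRAM at a given precision.
# Assumptions: 32 GB of VRAM (the RTX 5090's capacity) and 4 GB held back for the
# KV cache, activations and runtime; small per-block scale overheads of real
# quantized formats are ignored.

def max_params_billion(vram_gb, bits_per_param, reserve_gb=4.0):
    """Billions of parameters that fit in (vram_gb - reserve_gb) at bits_per_param."""
    usable_bits = (vram_gb - reserve_gb) * 8  # in billions of bits, since 1 GB = 1e9 bytes
    return usable_bits / bits_per_param


if __name__ == "__main__":
    for label, bits in [("FP16", 16), ("FP8", 8), ("4-bit", 4)]:
        print(f"{label:>5}: up to ~{max_params_billion(32, bits):.0f}B parameters")
```

Under these assumptions, FP16 tops out around 14B parameters and FP8 around 28B. A 30B model therefore needs weights quantized below 8 bits, and 4-bit formats leave headroom.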

Recommended Solution: The Ultimate Local Lab

If you are building a workstation to test these new architectures locally, the RTX 5090 is currently the most powerful card in NVIDIA’s GeForce lineup.

5. Disaggregated Serving: Separating Prefill from Decode

The final architectural shift isn’t just about the chip; it’s about how chips work together. This is the concept of Disaggregated Inference, championed by Google Cloud’s vLLM updates for TPUs and by NVIDIA’s HGX platforms.

The Mechanism:

LLM inference has two distinct phases:

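1. Prefill: The GPU processes your entire input prompt in one pass and builds the KV cache (the stored attention keys and values for every prompt token). This is compute-heavy and benefits from massive parallel processing.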

2. Decode: The GPU generates the answer one token at a time, rereading the model weights and the growing KV cache at every step. This is memory-heavy and serial.
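The compute-versus-memory split between the two phases can be made concrete with a roofline-style estimate of arithmetic intensity (FLOPs per byte moved). This sketch assumes a single 8192 x 8192 linear layer, 1-byte (FP8) weights and activations, and a hypothetical accelerator with 1,000 TFLOPS and 3.35 TB/s. It is an approximation, not a profile of any real chip.

```python
# Arithmetic intensity (FLOPs per byte moved) of one linear layer, the quantity a
# roofline model compares against the hardware's FLOPs-to-bandwidth ratio.
# Assumptions: an 8192 x 8192 weight matrix, 1-byte (FP8) weights and activations,
# and a hypothetical accelerator with 1,000 TFLOPS of compute and 3.35 TB/s of bandwidth.

def linear_layer_intensity(tokens, d_in, d_out, bytes_per_elem):
    flops = 2 * tokens * d_in * d_out                     # one multiply + one add per weight per token
    weight_bytes = d_in * d_out * bytes_per_elem          # weights read once per pass
    act_bytes = tokens * (d_in + d_out) * bytes_per_elem  # inputs read + outputs written
    return flops / (weight_bytes + act_bytes)


def classify(intensity, peak_tflops, bandwidth_tb_s):
    ridge = peak_tflops / bandwidth_tb_s  # FLOPs per byte where the roofline bends
    return "compute-bound" if intensity >= ridge else "memory-bound"


if __name__ == "__main__":
    phases = [("prefill, 2048-token prompt", 2048), ("decode, 1 token", 1)]
    for label, tokens in phases:
        ai = linear_layer_intensity(tokens, 8192, 8192, bytes_per_elem=1)
        print(f"{label}: {ai:.1f} FLOP/byte -> {classify(ai, 1000, 3.35)}")
```

Prefill reuses each weight across thousands of prompt tokens, which puts it far above the ridge point of about 300 FLOP/byte. A single decode step does only about 2 FLOPs per byte and sits far below it. The two phases stress different parts of the hardware.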

In traditional setups, one GPU does both, so long prefills stall ongoing decodes and the hardware must compromise between two very different workloads. Google Cloud’s latest AI Hypercomputer architecture allows systems to send the “Prefill” work to a compute-optimized chip (like a TPU) and the “Decode” work to a memory-optimized chip. This specialization reduces idle hardware and can improve overall system throughput by up to 3x compared to monolithic deployments.
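The hand-off pattern itself can be shown with a toy model. In this sketch, two threads in one process stand in for a prefill pool and a decode pool, connected by a queue that carries each request’s “KV cache.” The cache is just a list of tokens and generation is a dummy function, so every name here is hypothetical. A real system transfers KV caches over an interconnect, which this sketch does not model.

```python
import queue
import threading

# Toy model of disaggregated serving: a "prefill pool" and a "decode pool"
# connected by a hand-off queue. The "KV cache" is just a list of tokens and
# generation is a dummy stand-in; all names here are hypothetical.


def prefill(prompt):
    """Stand-in for the compute-heavy pass over the whole prompt at once."""
    return {"kv_cache": list(prompt)}


def decode_step(kv_cache):
    """Stand-in for one memory-heavy decode step: emit one token, extend the cache."""
    token = len(kv_cache)  # dummy "next token"
    kv_cache.append(token)
    return token


def prefill_worker(requests, handoff):
    for req_id, prompt in requests:
        handoff.put((req_id, prefill(prompt)))  # ship the KV cache to the decode side
    handoff.put(None)  # sentinel: no more work


def decode_worker(handoff, max_new_tokens, results):
    while True:
        item = handoff.get()
        if item is None:
            break
        req_id, state = item
        results[req_id] = [decode_step(state["kv_cache"]) for _ in range(max_new_tokens)]


def serve_disaggregated(requests, max_new_tokens=3):
    handoff = queue.Queue()
    results = {}
    producer = threading.Thread(target=prefill_worker, args=(requests, handoff))
    consumer = threading.Thread(target=decode_worker, args=(handoff, max_new_tokens, results))
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return results


if __name__ == "__main__":
    print(serve_disaggregated([("a", [10, 11]), ("b", [7, 8, 9, 5])]))
```

The design point is that neither worker ever runs the other phase. The prefill side can keep accepting new prompts while the decode side streams tokens, which is the separation the disaggregated architectures above apply across different chips.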

Frequently Asked Questions

What is the difference between FP4 and FP8 for AI inference?

FP8 uses 8 bits per value, while FP4 uses only 4 bits. On hardware with native FP4 support, FP4 roughly doubles peak Tensor Core throughput and halves the memory traffic per value, but it requires specialized hardware (like NVIDIA Blackwell) and advanced quantization software to maintain model accuracy.

Can I use an RTX 4090 or 5090 for enterprise AI inference?

Yes, for small to medium-scale deployments, where they offer strong value for money. However, they lack the massive VRAM (80 GB+) and high-speed interconnect (NVLink) of data center cards like the H100, making them less suitable for training or efficiently serving 100B+ parameter models.

Is memory bandwidth more important than CUDA cores?

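For inference, and specifically for generating text, usually yes. The speed of text generation is almost always limited by how fast data can move from memory to the chip, although processing long prompts (prefill) is compute-bound. For training, compute throughput (CUDA cores and especially Tensor Cores) is generally more critical.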

What is a TPU and is it better than a GPU?

A TPU (Tensor Processing Unit) is Google’s custom AI chip. TPUs are often more cost-effective for massive, specific workloads like running Google’s own models (Gemini/PaLM), while GPUs offer more flexibility for running a wide variety of open-source models.

What does ‘quantization’ mean in GPU architecture?

Quantization is the process of reducing the precision of the numbers used in a model (e.g., from 16-bit to 4-bit) to reduce memory usage and increase speed. Modern GPUs include dedicated hardware, such as Tensor Cores with FP8 (and, on Blackwell, FP4) support, that executes these lower-precision calculations natively.