- Direct Answer: What Defines the 2026 Inference Architecture?

- 1. Vera Rubin Architecture: Breaking the Memory Wall

- 2. The BlueField-4 DPU: Why Offloading is the New Overclocking

- 3. NVIDIA Dynamo: The “Hidden” 5x Multiplier

- 4. The Thermodynamics of 2026: Why Air Cooling is Dead

- 5. Recommended Solutions: Starting with Edge Inference

- Frequently Asked Questions

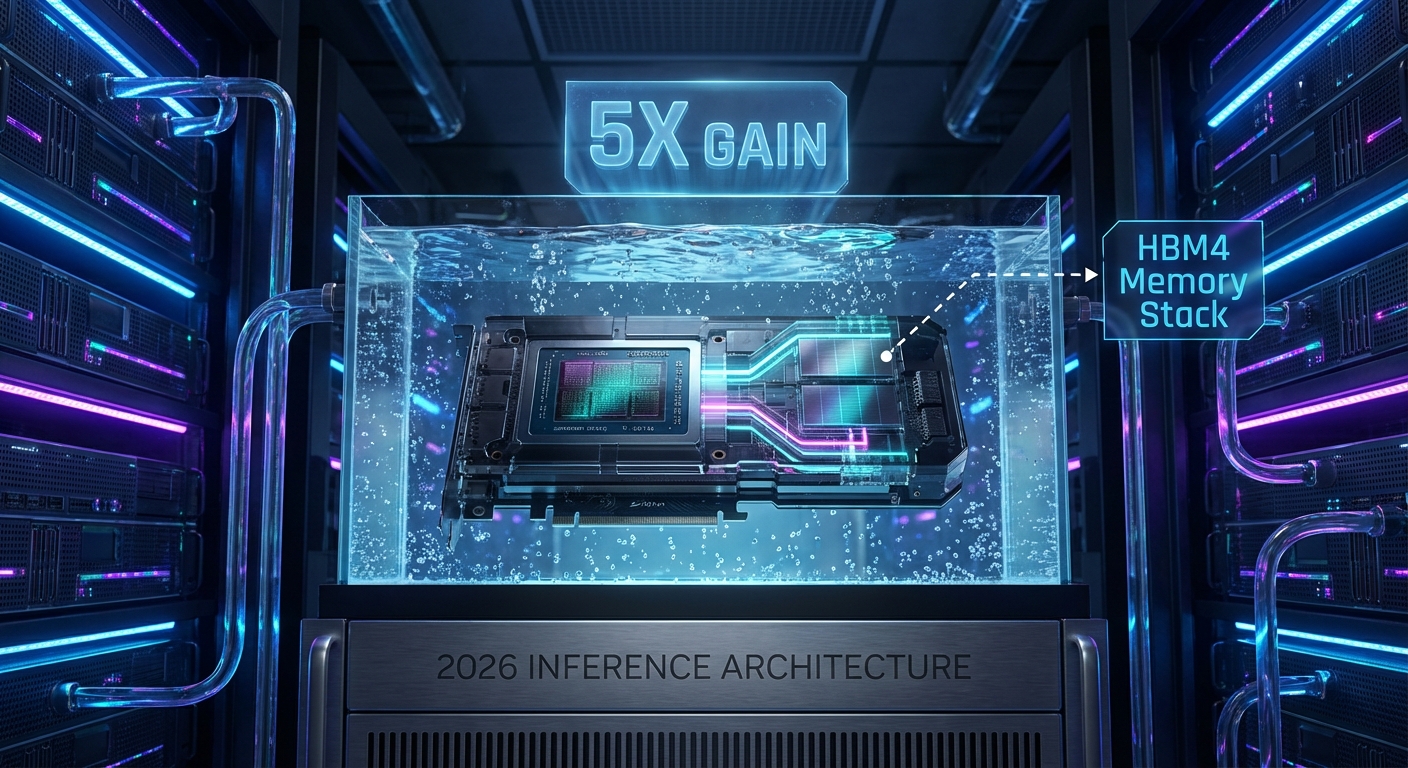

Direct Answer: What Defines the 2026 Inference Architecture?

The latest GPU architecture improvements for AI inference center on NVIDIA’s upcoming Vera Rubin platform and the BlueField-4 DPU. Unlike previous generations, which focused on raw training compute, these 2026 advancements target the “Memory Wall” with HBM4 memory and the NVIDIA Dynamo inference-serving software, a combination claimed to deliver up to 5x the inference performance of the Blackwell architecture. The shift also makes liquid-cooled rack-scale systems such as the GB300 NVL72 effectively mandatory for managing the thermal density of gigawatt-scale AI factories.

1. Vera Rubin Architecture: Breaking the Memory Wall

In 2025, the industry focused on the NVIDIA Blackwell B200 vs AMD MI325X benchmarks, which were largely defined by training throughput. However, the 2026 roadmap, spearheaded by the Vera Rubin architecture, marks a decisive pivot toward Inference-First Design.

The Core Bottleneck: Memory Bandwidth

The primary challenge in deploying Large Language Models (LLMs) isn’t calculating the answer; it’s moving the massive model weights from memory to the compute cores for every generated token. This is known as the “Memory Wall.” The Rubin architecture addresses it by integrating HBM4 (High Bandwidth Memory 4). Compared with HBM3e, HBM4 doubles the interface width per stack and allows the base die at the bottom of the memory stack to be built on a logic process, so custom logic can sit inside the stack itself.
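To see why bandwidth rather than raw compute sets the ceiling, consider single-stream decoding: producing one token requires reading every weight from memory once. The minimal Python sketch below turns that into arithmetic. The 70-billion-parameter model, FP8 weights, and the 4, 8, and 16 TB/s bandwidth figures are hypothetical examples, not published specifications for Rubin or any other GPU, and the model ignores KV-cache reads and compute time.

```python
# Back-of-envelope model of the "Memory Wall" for LLM decoding.
# Assumption: at batch size 1, generating one token reads every weight once,
# so memory bandwidth, not FLOPs, caps tokens per second.
# Model size and bandwidth figures below are hypothetical examples.


def decode_tokens_per_sec_bound(params: float, bytes_per_param: float,
                                bandwidth_bytes_per_sec: float) -> float:
    """Bandwidth-limited upper bound on single-stream decode speed."""
    weight_bytes = params * bytes_per_param
    return bandwidth_bytes_per_sec / weight_bytes


def arithmetic_intensity(batch_size: int, bytes_per_param: float) -> float:
    """FLOPs performed per byte of weights read during one decode step.

    Each parameter contributes one multiply-add (2 FLOPs) per sequence in the
    batch, while the weights are read once and shared across the whole batch.
    """
    return 2 * batch_size / bytes_per_param


if __name__ == "__main__":
    params = 70e9   # hypothetical 70B-parameter model
    fp8 = 1.0       # 1 byte per weight at FP8
    for tb_per_s in (4, 8, 16):  # hypothetical memory bandwidths in TB/s
        bound = decode_tokens_per_sec_bound(params, fp8, tb_per_s * 1e12)
        print(f"{tb_per_s:>2} TB/s -> at most {bound:6.1f} tokens/s per stream")
    print("FLOPs per byte at batch 1:", arithmetic_intensity(1, fp8))
    print("FLOPs per byte at batch 64:", arithmetic_intensity(64, fp8))
```

Doubling bandwidth doubles the ceiling, while batching raises arithmetic intensity because one read of the weights is shared by every sequence in the batch.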

Why It Matters:

This architectural shift allows the GPU to hold significantly larger context windows (the attention KV cache) in active memory and stream them to the compute cores quickly. For enterprise users, this should mean a single rack of Rubin GPUs can serve thousands of concurrent users with lower latency than a room full of Hopper H100s. As noted in NVIDIA’s inference whitepaper, optimizing for memory bandwidth is the single most effective way to reduce the total cost of ownership (TCO) for generative AI deployment.
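The memory that context consumes can be estimated directly. In a standard transformer, each token stores one key and one value vector per KV head in every layer. The sketch below assumes a configuration similar to a 70B-class model with grouped-query attention (80 layers, 8 KV heads, head dimension 128, FP16 cache) and a hypothetical 100 GiB of memory left free after loading weights; real serving engines add paging and fragmentation overhead that it ignores.

```python
GIB = 2**30


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   bytes_per_elem: int, seq_len: int, batch_size: int = 1) -> int:
    """Bytes of attention KV cache for batch_size sequences of seq_len tokens.

    The leading factor of 2 accounts for storing both a key and a value vector.
    """
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem * seq_len * batch_size


def max_concurrent_sessions(free_bytes: int, per_session_bytes: int) -> int:
    """How many full-length sessions fit in the memory left after loading weights."""
    return free_bytes // per_session_bytes


if __name__ == "__main__":
    # Hypothetical 70B-class GQA configuration with an FP16 (2-byte) cache.
    cfg = dict(num_layers=80, num_kv_heads=8, head_dim=128, bytes_per_elem=2)
    per_token = kv_cache_bytes(seq_len=1, **cfg)
    print(f"KV cache per token: {per_token / 1024:.0f} KiB")
    for ctx in (8_192, 32_768, 131_072):
        size = kv_cache_bytes(seq_len=ctx, **cfg)
        sessions = max_concurrent_sessions(100 * GIB, size)
        print(f"{ctx:>7} tokens: {size / GIB:5.1f} GiB per session, "
              f"{sessions} sessions in 100 GiB")
```

Under these assumptions a single 128K-token session needs 40 GiB of cache, which is why memory capacity and bandwidth, not FLOPs, cap how many long-context users one GPU can serve.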

2. The BlueField-4 DPU: Why Offloading is the New Overclocking

A common misconception is that the GPU does everything. In reality, a significant share of an AI server’s processing goes to infrastructure overhead such as network packet processing, security encryption, and storage management. That work traditionally runs on the host CPU, and when it falls behind, expensive GPUs sit idle waiting for data. The BlueField-4 Data Processing Unit (DPU) is designed to reclaim this lost efficiency.

The Mechanism of Offloading:

The BlueField-4 acts as a “traffic cop” for the AI server. It sits between the network and the GPUs, taking over data ingestion and security protocols from the host. With those tasks offloaded, the host CPU stops being a bottleneck, and the Vera Rubin GPUs spend more of their cycles on matrix multiplication (the math behind AI) instead of waiting on I/O.
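The benefit of offloading can be illustrated with a simple pipeline model. If infrastructure work (networking, decryption, storage reads) runs on the host in series with each GPU step, the GPU idles while it happens; if a DPU handles that work for the next batch concurrently, the two overlap. This minimal sketch assumes perfect overlap in the offloaded case, and the millisecond figures are hypothetical; it says nothing about how BlueField-4 is implemented internally.

```python
def gpu_utilization(compute_ms: float, io_ms: float, offloaded: bool) -> float:
    """Fraction of each step the GPU spends computing.

    Serial (no offload): the host finishes I/O for a batch before the GPU starts,
    so one step takes compute_ms + io_ms.
    Offloaded (pipelined, assumed perfect overlap): I/O for the next batch runs
    during the current compute, so one step takes max(compute_ms, io_ms).
    """
    if compute_ms <= 0 or io_ms < 0:
        raise ValueError("compute_ms must be positive and io_ms non-negative")
    step_ms = max(compute_ms, io_ms) if offloaded else compute_ms + io_ms
    return compute_ms / step_ms


if __name__ == "__main__":
    for compute_ms, io_ms in ((8.0, 2.0), (8.0, 10.0)):  # hypothetical timings
        serial = gpu_utilization(compute_ms, io_ms, offloaded=False)
        piped = gpu_utilization(compute_ms, io_ms, offloaded=True)
        print(f"compute={compute_ms} ms, io={io_ms} ms: "
              f"serial {serial:.0%}, offloaded {piped:.0%}")
```

Once I/O takes longer than compute, overlap alone cannot hide it, so the DPU’s own throughput matters as much as the act of offloading.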

This is crucial for the 2026 hardware landscape, where performance per watt will determine profitability. By decoupling infrastructure management from inference compute, data centers aim to approach the “30x energy efficiency” gains claimed in recent infrastructure reports.

3. NVIDIA Dynamo: The “Hidden” 5x Multiplier

Hardware is useless without the software to drive it. The most aggressive claim for the 2026 cycle is the 5x inference performance gain over Blackwell, attributed largely to the NVIDIA Dynamo software architecture.

How Dynamo Works:

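Dynamo is an open-source, distributed inference-serving framework and the successor to NVIDIA Triton Inference Server. Rather than replacing engines like TensorRT-LLM, it orchestrates them (along with vLLM and SGLang) across many GPUs. Its central technique is disaggregated serving: the compute-heavy prefill phase, which processes the prompt, and the memory-bound decode phase, which generates tokens one at a time, run on separate GPU pools that can be sized independently. A KV-cache-aware router sends each request to the worker that already holds the most of its cached context, avoiding redundant recomputation. Kernel fusion, which merges multiple operations into a single GPU kernel to cut launch overhead, still happens inside the underlying engines.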
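NVIDIA describes KV-cache-aware routing as one of Dynamo’s core features: a request whose prompt prefix is already cached on some worker should go to that worker, so the prefix does not have to be recomputed. The toy router below illustrates the idea in plain Python. It is not Dynamo’s API; the class and function names, the 4-token block size, and the scoring rule (cached blocks minus a load penalty) are hypothetical choices made for readability.

```python
from dataclasses import dataclass, field

BLOCK_TOKENS = 4  # hypothetical KV block size; real engines use larger blocks


def block_hashes(tokens: list[int], block: int = BLOCK_TOKENS) -> list[int]:
    """Hash each complete block of the prompt.

    Each hash chains in the previous one, so equal hashes imply an equal prefix.
    A trailing partial block is ignored because it cannot be reused.
    """
    hashes: list[int] = []
    prev = 0
    usable = len(tokens) - len(tokens) % block
    for start in range(0, usable, block):
        prev = hash((prev, tuple(tokens[start:start + block])))
        hashes.append(prev)
    return hashes


@dataclass
class Worker:
    name: str
    active_requests: int = 0
    cached_blocks: set[int] = field(default_factory=set)

    def prefix_overlap(self, hashes: list[int]) -> int:
        """Count leading prompt blocks already resident in this worker's KV cache."""
        count = 0
        for h in hashes:
            if h not in self.cached_blocks:
                break
            count += 1
        return count


def route(workers: list[Worker], prompt: list[int], load_penalty: float = 1.0) -> Worker:
    """Send the prompt to the worker with the best cache reuse minus a load penalty."""
    hashes = block_hashes(prompt)
    best = max(workers,
               key=lambda w: w.prefix_overlap(hashes) - load_penalty * w.active_requests)
    best.active_requests += 1
    best.cached_blocks.update(hashes)  # after prefill, this worker holds these blocks
    return best


if __name__ == "__main__":
    workers = [Worker("w0"), Worker("w1")]
    system_prompt = list(range(8))  # shared 8-token prefix, i.e. two blocks
    print(route(workers, system_prompt + [100, 101, 102, 103]).name)  # w0 (tie, first wins)
    print(route(workers, system_prompt + [200, 201, 202, 203]).name)  # w0 (reuses 2 blocks)
    print(route(workers, list(range(900, 908))).name)  # w1 (no shared prefix, w0 busier)
```

The load penalty keeps one worker from absorbing every request just because it holds a popular prefix; tuning that trade-off is the hard part in a real router.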

According to SDX Central, 2026 will be the year inference revenue overtakes training revenue. Tools like Dynamo are the catalyst for this shift, making it economically viable to deploy massive trillion-parameter models for everyday applications rather than just research demos.

4. The Thermodynamics of 2026: Why Air Cooling is Dead

For high-density AI racks, the days of air cooling are effectively over. The density required by the GB300 NVL72 systems and the Vera Rubin platform pushes heat output beyond what fans can practically remove. Liquid cooling is no longer an enthusiast feature; it is an industrial mandate.

The Shift to Gigawatt-Scale Factories:

Future AI data centers are being designed as “Gigawatt Factories.” To support this, liquid-cooled cold plates are mounted directly on the GPU and CPU packages. This allows the chips to run at sustained peak clock speeds without thermal throttling. For a deeper technical look at how these thermal designs influence performance, refer to our deep dive on 2025 GPU architecture improvements.

The Efficiency Paradox:

While liquid cooling sounds energy-intensive, it is actually more efficient. Water conducts heat roughly 24 times better than air, allowing data centers to reduce their reliance on power-hungry computer room air conditioning (CRAC) units in favor of liquid heat-exchange loops.
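The 24x figure roughly matches the ratio of water’s thermal conductivity to air’s. How much coolant must be moved to carry a given heat load depends instead on volumetric heat capacity, and there water’s advantage is far larger. The sketch below solves the heat-balance equation Q = ρ · V̇ · c_p · ΔT for the flow rate V̇; the 120 kW rack load and 10 K temperature rise are hypothetical round numbers, and the fluid properties are textbook values near room temperature.

```python
# Textbook fluid properties near room temperature (approximate).
WATER_DENSITY = 997.0   # kg/m^3
WATER_CP = 4186.0       # J/(kg*K)
AIR_DENSITY = 1.2       # kg/m^3, sea level
AIR_CP = 1005.0         # J/(kg*K)


def volumetric_flow_m3_s(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Coolant flow (m^3/s) that absorbs heat_w watts while warming by delta_t_k kelvin.

    Rearranged from Q = rho * V_dot * c_p * dT.
    """
    return heat_w / (density * cp * delta_t_k)


if __name__ == "__main__":
    rack_heat_w = 120e3  # hypothetical rack load
    delta_t = 10.0       # hypothetical coolant temperature rise
    water = volumetric_flow_m3_s(rack_heat_w, WATER_DENSITY, WATER_CP, delta_t)
    air = volumetric_flow_m3_s(rack_heat_w, AIR_DENSITY, AIR_CP, delta_t)
    print(f"water: {water * 60_000:.0f} L/min")  # m^3/s -> L/min
    print(f"air:   {air:.1f} m^3/s")
    print(f"air needs about {air / water:,.0f}x the volume flow of water")
```

Under these assumptions water needs on the order of 170 liters per minute while air needs roughly 10 cubic meters per second, a volume ratio of about 3,500, which is why dense racks move heat with liquid loops instead of fans.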

5. Recommended Solutions: Starting with Edge Inference

While the Vera Rubin and Blackwell architectures power the cloud, developers need accessible hardware to build and test inference applications today. The principles of CUDA optimization and memory management remain the same whether you are on a $200,000 server or a $200 dev kit.

For Developers & Prototyping: NVIDIA Jetson Orin Nano

This is the industry standard for “Edge AI.” If you are building robots, drones, or smart cameras that need to run inference locally (without an internet connection), this is the starting point. It features the same Ampere architecture found in larger enterprise cards but scaled for 7-15 watts of power.
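Before picking a board, a quick footprint check shows which models can run locally at all. Jetson modules share a single memory pool between the CPU and GPU, so the weights must fit alongside the operating system, the runtime, and the KV cache. The sketch below assumes an 8 GB board (the memory size of the Jetson Orin Nano developer kit) and a hypothetical 3 GB reserved for everything other than weights; it counts only weight storage at each precision.

```python
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_gb(params: float, precision: str) -> float:
    """Storage for the model weights alone, in decimal gigabytes."""
    return params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_device(params: float, precision: str, device_gb: float, reserved_gb: float) -> bool:
    """True if the weights fit in the memory left after the reserved budget."""
    return weight_gb(params, precision) <= device_gb - reserved_gb


if __name__ == "__main__":
    device_gb, reserved_gb = 8.0, 3.0  # 8 GB board; the 3 GB reserve is a guess
    for params in (1e9, 3e9, 7e9):
        verdicts = ", ".join(
            f"{p}: {'fits' if fits_on_device(params, p, device_gb, reserved_gb) else 'too big'}"
            for p in BYTES_PER_PARAM)
        print(f"{params / 1e9:.0f}B params -> {verdicts}")
```

Under these assumptions a 7B model fits only at 4-bit precision, which is why quantization is usually the first optimization on edge hardware.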

For Advanced Edge Development: NVIDIA Jetson AGX Orin

For developers who need much more headroom in a compact dev-kit form factor, the AGX Orin offers up to 275 TOPS of AI performance. This makes it practical to run and profile complex transformer models locally before deploying them to expensive cloud instances.

Frequently Asked Questions

What is the difference between Vera Rubin and Blackwell architectures?

Blackwell (2024/2025) focused on bridging the gap between training and inference with FP4 precision. Vera Rubin (2026) is designed specifically to solve the “Memory Wall,” utilizing HBM4 memory and dedicated offloading DPUs to maximize inference throughput for massive user bases.

How does the BlueField-4 DPU improve performance?

The BlueField-4 DPU offloads infrastructure tasks (networking, storage management, and security encryption) from the host CPU, so the GPUs are no longer left waiting on data. This lets the GPU’s compute resources focus on running AI models, resulting in higher efficiency and lower latency.

Why is liquid cooling required for new AI chips?

Modern AI chips like the GB200 and upcoming Rubin GPUs pack hundreds of billions of transistors into a small area, creating extreme heat density. Traditional air fans cannot remove this heat fast enough. Water conducts heat roughly 24x better than air, so liquid cooling keeps the chips within safe operating temperatures instead of letting them throttle under load.

What is HBM4 memory?

HBM4 (High Bandwidth Memory 4) is the next generation of GPU memory. It doubles the interface width per stack (2048 bits compared with 1024 bits for HBM3e) and allows the base die beneath the DRAM stack to be built on a logic process, opening the door to custom logic inside the memory stack. The wider interface drastically increases the speed at which data moves between memory and the compute cores.
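Peak bandwidth per stack is simply interface width times per-pin data rate, so doubling the width doubles bandwidth at the same pin speed. The sketch below works through that arithmetic. The 8 Gb/s per-pin rate and the 8-stack GPU are illustrative assumptions, not the specification of any product; the pin rate is held equal for both widths only to isolate the effect of width, and real HBM3e parts run at different pin rates.

```python
def stack_bandwidth_gb_s(interface_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth of one HBM stack in gigabytes per second (bits / 8 = bytes)."""
    return interface_bits * gbps_per_pin / 8


def gpu_bandwidth_tb_s(stacks: int, interface_bits: int, gbps_per_pin: float) -> float:
    """Aggregate peak bandwidth across all stacks, in terabytes per second."""
    return stacks * stack_bandwidth_gb_s(interface_bits, gbps_per_pin) / 1000


if __name__ == "__main__":
    pin_rate = 8.0  # Gb/s per pin, illustrative
    for label, width in (("1024-bit (HBM3e-style)", 1024), ("2048-bit (HBM4)", 2048)):
        print(f"{label}: {stack_bandwidth_gb_s(width, pin_rate):.0f} GB/s per stack, "
              f"{gpu_bandwidth_tb_s(8, width, pin_rate):.1f} TB/s with 8 stacks")
```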

Will these improvements affect consumer graphics cards?

Eventually, yes. Technologies that debut in data-center GPUs, such as specialized Tensor Cores (first introduced in the data-center Volta V100), typically reach consumer GeForce cards later, where they power features like DLSS (Deep Learning Super Sampling) and local AI creation on cards such as the RTX 50-series and future generations.